R is fast at processing data sets, but it is limited by memory (physical RAM plus swap). SAS processes much larger data sets, but it is slow because it keeps the data sets on the hard drive. Although computer processors have become faster and memory larger, hard drive performance hasn’t changed much: disk I/O is still an “expensive” operation, meaning it is slow. One easy way to make SAS faster is by reducing disk I/O by compressing data sets.

Compression of SAS data sets is seamless: once created, the compressed data sets can be opened, read, and modified normally without any extra code, options, or steps. Compression can be turned on as a system option (affecting all SAS data sets), on a LIBNAME statement, and on individual data sets. I generally recommend turning it on as a system option.

Data set compression helps the most on data sets with missing and short numerics and character values with unused and variable space. Say for example you have a field called name, and its width is 60. The first observation has a field Bob (three characters): that means 57 are blank and wasted. SAS still writes all 60 characters each time: this wastes disk space and time.

If a data set has only a few rows, it may not be worth your time tuning its performance. For example, the difference between 100 KB and 10KB (a 90% reduction) is only 90KB, which is not much nowadays.

How much time and disk space you can save may surprise you! I first learned the importance of disk performance when benchmarking the OpenOffice.org application where I noticed a dramatic difference between cold starts and warm starts. In the case of SAS, compression saves SAS the expensive effort of writing all the data to the disk. Unlike using an external compressor (like ZIP), the first time SAS writes the data to the disk, it is already compressed (so it isn’t written uncompressed, read back to the compressor, and written again compressed). People buy computers with faster RAM and CPUs, but often in computers the hard disk is the bottleneck. Unfortunately disk performance hasn’t improved much over the years (except for solid state storage devices, which are still rare today). Stepping outside of Computer Science for a moment, Eliyahu Goldratt’s book “The Goal” teaches us first to identify the bottleneck and then build the process around it (even if the extra work seems expensive).

Let’s start by creating a test data set with compression enabled:

/* this data set option enables compression */ data fast (compress=yes); set /* this data set happens to be large (21MB) and contains several character strings */ sashelp.zipcode sashelp.zipcode /* repeat the data set to make a larger result, which is easier to benchmark */ sashelp.zipcode sashelp.zipcode sashelp.zipcode; run;

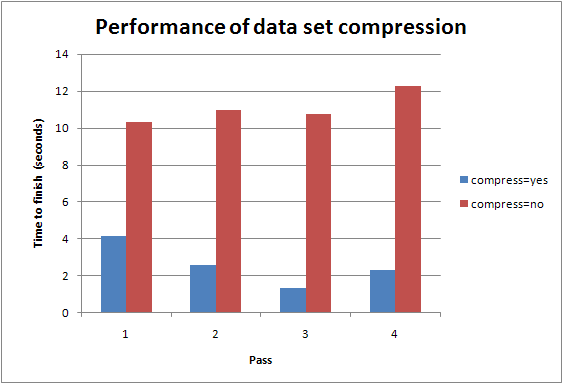

Repeat this code a few times, and watch the speed. (It will vary the most between the first and second pass because of cache on the hard drive and in the operating system.) On my system compression here saves 77% of the disk space and finishes in 4.15, 2.61, 1.35, and 2.34 seconds.

Now try it with compression disabled:

/* explicitly disable compression in case it was enabled by a system option */ data slow (compress=no); set sashelp.zipcode sashelp.zipcode sashelp.zipcode sashelp.zipcode sashelp.zipcode; run;

Repeat this code a few times. On my system it finishes in 10.31, 11.01, 10.76, and 12.29 seconds. Compression improved the speed 76%, which you may notice is nearly the same difference as the disk space (77%).

Data set compression works with PROC SQL too:

proc sql; create table fast(compress=yes) as select * from sashelp.zipcode; quit;

If not enabled as a system or library-level option, you must repeat the data set option each time you do a DATA step:

/* Did you know here SAS will re-write the whole data set rather than modifying it? */ data fast (compress=yes); set fast; x = 1; run;

To enable compression for all SAS data sets, put this system option in your SAS autoexec file:

option compress=yes;

Also, experiment with the benefits of compress=binary which has a more advanced compression.

In a few cases, compression may slightly inflate the data set (and hurt performance). This is common in data sets with few variables and all the variables are numeric. When this happens, disable compression for those specific data sets or system wide. In the log look for notes like this:

NOTE: Compressing data set WORK.NUMBERS increased size by 33.33 percent.

In some cases with compress=yes (but not compress=binary), SAS automatically disables compression.

NOTE: Compression was disabled for data set WORK.RESPONSE because compression overhead would increase the size of the data set.

Using compression increases CPU usage, but when using SAS on most modern systems, the CPU is usually not the bottleneck: the hard drive is the bottleneck. In other words, it’s strategically beneficial to trade excess CPU resources for scarce disk I/O resources. Verify the tradeoff yourself by using Process Explorer (or similar tool): you should see the CPU is rarely at 100%. Another way to verify the benefit is to simply look at the duration of the DATA step or procedure (as done above).

A related article: Date and datetime: accuracy vs storage and I/O costs

Makes me wonder why SAS didn’t make compress=yes as a default option.

This was super helpful, I run regular processes that were taking around 15 minutes that now take around 7. I did a similar test on proc sort and processing time gets cut in half when using compression. Thanks!

@Vishal: until recently the price to pay was high CPU load. Now CPUs are faster and cheaper, so it makes sense to use this option more often. But not always.

@Andrew: “Data set compression helps the most on data sets with many rows and with variable-sized character variables.” No, data set compression helps the most on data sets with many *variables*, as each observation is compressed separately. The longer the observation (more variables, especially missing numerics and unused character spaces, the higher the compression ratio). The number of rows has no impact as each row is compressed independently from others. This choice was made so one can still une indexes and point= on compressed datasets. For datasets with mostly numeric values, use compress=binary (which is more CPU-intensive).

Yes, you are right about the rows. I will fix the article. I knew that, so I am not sure why I wrote it the wrong way. 🙂

By the way, I haven’t had time to write about it yet, but lately I have been using LENGTH as a supplement or replacement for compression. By default SAS allocates 8 bytes for numeric variables, but many can be represented with a few as 3 bytes. On many of my data sets LENGTH works better than COMPRESS—especially on data sets where I can set a length of 3 on most numeric variables. Also sometimes now I use a character of length 1 (for representing a binary outcome or something with similarly few values) instead of a small numeric.

Now I remember what I meant about the rows, so I clarified the article. I mean if there are few rows, that implies the data set is small (say, 100KB), so it is not practically worth tuning because it won’t make much practical difference in terms of speed or size on today’s computers. But for data sets with millions of rows, a small percentage improvement in size may translate to savings of minutes of processing time—this is a worthwhile investment.

Morning Andrew, I have a question here: How should I disable SAS from issuing the following note :

NOTE: Compressing data set Big_big_big_file decreased size by 15.52 percent.

Compressed is 7658742 pages; un-compressed would require 8266585 pages.

I turned on the compression option at system level and my SAS code has hundreds of datasteps and procs. I ran the batch at night and in the morning when I checked the Log, it is full of the notes about “compressed is … uncompressed is….” which drove me nuts.

I fully believe SAS does an terrific job compressing the datasets but there’s no need telling me this 1 thousand times every morning…hhaha.

Any suggestion is highly appreciated !

There may be some SAS option to filter the log, but I don’t know.

I also have long programs that display this message many times, but I just ignore it. I suppose you could write a SAS program to parse the log and filter the messages.

Thanks Andrew for your quick response. Yes I agree I could ‘parse/filter the SAS log’ theoretically – however the log and output are considered ‘proof’ and are going to be presented to clients and thus ‘untouchable’ in this sense. And it’s really annoying the compression message takes up 1/3 of the log, especially when every morning I’m focusing on the row counts of each new dataset created last night by reading hundreds pages of logs.

Anyway I’ll check with SAS to see if there’s any option to disable the log message while keeping the compress= option active.

Here is my follow_up : this feature is the “most wanted” feature voted in a 2009 Ballot ! “.

“provide an option that enables you to turn off or on certain notes that are

written to the SAS log; for example, notes about data set compression,invalid data, or invalid argument to a function” ranks NUMBER_ONE in the following paper :

Click to access Ballot09.pdf

Pingback: CAS data modeling for performance - SAS Users

Pingback: CAS data modeling for performance – Cloud Data Architect