Artificial neural networks are commonly thought of as tools for classification only, because of their relationship to logistic regression: neural networks typically use a logistic activation function and output values from 0 to 1, just as logistic regression does. However, the ability of neural networks to model complex, non-linear hypotheses is valuable for many real-world problems, including regression. So can they be used for regression? Indeed: the first example of neural networks in the book “Data Mining Techniques: Second Edition” by Berry and Linoff is estimating the value of a house.

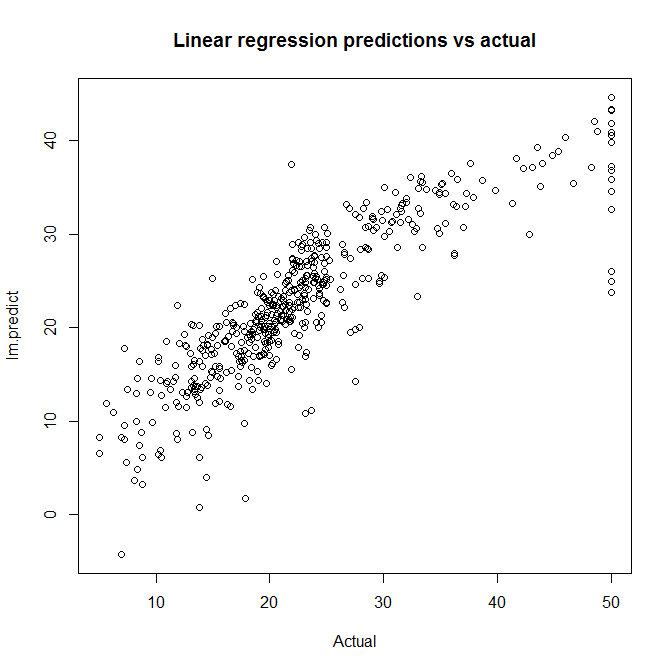

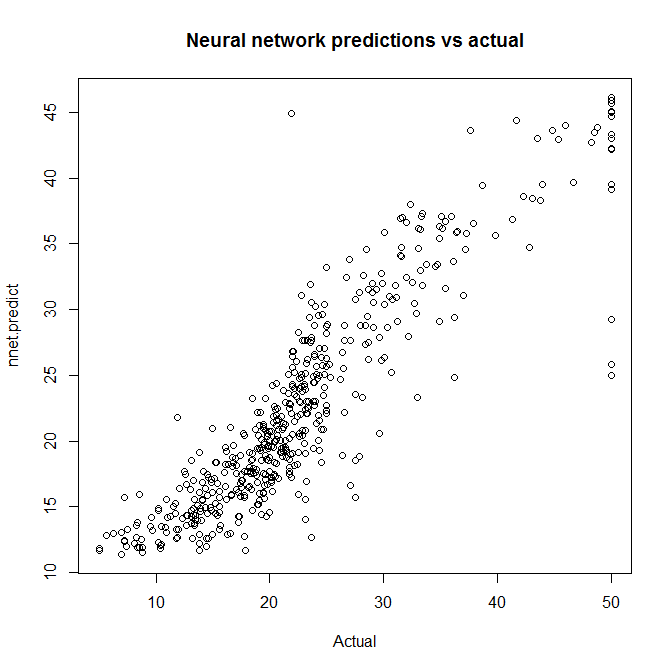

Using standard R packages, this article gives a brief example of regression with neural networks and compares it with multivariate linear regression. The data set is housing data for 506 census tracts of Boston from the 1970 census, and the goal is to predict the median value of owner-occupied homes (in thousands of USD).

    ###
    ### prepare data
    ###
    library(mlbench)
    data(BostonHousing)

    # inspect the range, which is 5-50
    summary(BostonHousing$medv)

    ##
    ## model linear regression
    ##
    lm.fit <- lm(medv ~ ., data=BostonHousing)
    lm.predict <- predict(lm.fit)

    # mean squared error: 21.89483
    mean((lm.predict - BostonHousing$medv)^2)

    plot(BostonHousing$medv, lm.predict,
         main="Linear regression predictions vs actual",
         xlab="Actual")

    ##
    ## model neural network
    ##
    require(nnet)

    # scale the response: divide by 50 to bring it into the 0-1 range
    nnet.fit <- nnet(medv/50 ~ ., data=BostonHousing, size=2)

    # multiply by 50 to restore the original scale
    nnet.predict <- predict(nnet.fit)*50

    # mean squared error: 16.40581
    mean((nnet.predict - BostonHousing$medv)^2)

    plot(BostonHousing$medv, nnet.predict,
         main="Neural network predictions vs actual",
         xlab="Actual")

Now, let’s use the function train() from the package caret to optimize the neural network hyperparameters decay and size. Also, caret performs resampling to give a better estimate of the error. In this case we scale the response for linear regression by the same value, so the error statistics are directly comparable.

    > library(mlbench)
    > data(BostonHousing)
    >
    > require(caret)
    >
    > mygrid <- expand.grid(.decay=c(0.5, 0.1), .size=c(4,5,6))
    > nnetfit <- train(medv/50 ~ ., data=BostonHousing, method="nnet", maxit=1000, tuneGrid=mygrid, trace=F)
    > print(nnetfit)
    506 samples
     13 predictors

    No pre-processing
    Resampling: Bootstrap (25 reps)

    Summary of sample sizes: 506, 506, 506, 506, 506, 506, ...

    Resampling results across tuning parameters:

      size  decay  RMSE    Rsquared  RMSE SD  Rsquared SD
      4     0.1    0.0852  0.785     0.00863  0.0406
      4     0.5    0.0923  0.753     0.00891  0.0436
      5     0.1    0.0836  0.792     0.00829  0.0396
      5     0.5    0.0899  0.765     0.00858  0.0399
      6     0.1    0.0835  0.793     0.00804  0.0318
      6     0.5    0.0895  0.768     0.00789  0.0344

    RMSE was used to select the optimal model using the smallest value.
    The final values used for the model were size = 6 and decay = 0.1.
    >
    > lmfit <- train(medv/50 ~ ., data=BostonHousing, method="lm")
    > print(lmfit)
    506 samples
     13 predictors

    No pre-processing
    Resampling: Bootstrap (25 reps)

    Summary of sample sizes: 506, 506, 506, 506, 506, 506, ...

    Resampling results

      RMSE    Rsquared  RMSE SD  Rsquared SD
      0.0994  0.703     0.00741  0.0389

A tuned neural network has an RMSE of 0.0835 compared to linear regression’s RMSE of 0.0994 (both measured on the scaled response, medv/50).

I reran this code without changing anything and the neural net fit very poorly (most of the predictions at 0 or 1). I wouldn’t think the default settings would differ from computer to computer.

Actually, I reran it many times and sometimes it was really good, sometimes horrible (all equal to mean). I increased the maxit to 1000 and that helped, but there were still some really bad estimations. It seems that some of the nnets were converging after 1 iteration and those were the really bad ones.

Out of necessity, neural networks initialize the weights to random values, so this explains the inconsistency. Also, the optimization process that tunes the weights can get stuck in local minima, which is apparently what you found. I’ve heard that local minima are not a problem in practice, but apparently they can be an issue. For this article I just used the first model. Interesting.
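To make the effect of random initialization visible, here is a minimal sketch that refits the article's network from several starting points and keeps the best one. The seeds, the helper name `fit_once`, and `maxit = 1000` are choices made for illustration, not settings from the article.

```r
library(mlbench)  # provides the BostonHousing data used in the article
library(nnet)

data(BostonHousing)

# Fit the same network from one random starting point and return the fit
# together with its training MSE on the original medv scale.
# fit_once is a hypothetical helper, not part of nnet.
fit_once <- function(seed) {
  set.seed(seed)  # fixes the random initial weights for this run
  fit <- nnet(medv / 50 ~ ., data = BostonHousing, size = 2,
              maxit = 1000, trace = FALSE)
  list(fit = fit,
       mse = mean((predict(fit) * 50 - BostonHousing$medv)^2))
}

seeds <- 1:10                        # arbitrary seeds for illustration
runs  <- lapply(seeds, fit_once)
mse   <- sapply(runs, function(r) r$mse)

print(round(mse, 2))                 # spread across starting points
best <- runs[[which.min(mse)]]$fit   # keep the restart with the lowest MSE
```

Because the selection here uses training error, it favors restarts that fit the training data most closely; choosing by a held-out set or by resampling would be a safer criterion.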

Well, if this is the situation, isn’t it a severe drawback of NNets? I mean, if I am training the model on a very large data set, I would expect the results to be stable and not vary much on another sample of the same data set. The fluctuations in the predictions could be very misleading then.

I really don’t know much of the theory behind them, but as heuristicandrew pointed out, this is only the first model. Are there ways that the predictions can be improved in terms of accuracy and stability with the same procedure?

No setting seed

This seems interesting. I haven’t used neural networks much. Will definitely try this out.

One question: why has the dependent variable been divided by 50? I mean, is it necessary that the dependent variable be in the 0 – 1 range?

Thanks

Exactly. With a logistic activation function, a neural network always outputs values between 0 and 1. Other, less commonly used activation functions exist, such as the hyperbolic tangent, which outputs values between -1 and 1. In any case, we can adapt to this limitation using scaling.

Where did you get 50 for scaling the original dependent variable for this dataset? Is there some function we can use to scale all original values from 0 to 1 instead of having a divisor of 50?

I used 50 because that was the range for the variable. To rescale all variables, look at the pre-processing functionality in the caret package.
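As an alternative to a hard-coded divisor, a generic min-max rescaling can be sketched in base R as below. The helpers `rescale01` and `unscale01` and the example values are hypothetical. Note that this maps the minimum to exactly 0, which is slightly different from dividing by 50. For predictors, caret's `preProcess()` with `method = "range"` is, as far as I know, the matching built-in option.

```r
# Hypothetical helpers (not from caret): min-max scaling and its inverse.
rescale01 <- function(x, lo = min(x), hi = max(x)) (x - lo) / (hi - lo)
unscale01 <- function(z, lo, hi) z * (hi - lo) + lo

medv <- c(5, 21.2, 36.2, 50)   # made-up example values
lo <- min(medv)
hi <- max(medv)

z <- rescale01(medv, lo, hi)   # values now lie in [0, 1]
print(range(z))

# Predictions made on the 0-1 scale are mapped back the same way.
restored <- unscale01(z, lo, hi)
print(all.equal(restored, medv))
```

Keeping `lo` and `hi` from the training data matters: new data must be scaled with the same two numbers, or the predictions cannot be mapped back consistently.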

Another question, where have you specified the logistic activation function? Is it by any chance the default in nnet()?

You are right, I didn’t specify the activation function. Logistic is the default for nnet(), which I verified by comparing nnet()’s outputs to a copy of the same network computed in SAS and OpenOffice.org.

I’m going to check into the option ‘linout’ which is labeled “switch for linear output units”
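For reference, a minimal sketch of what `linout` changes: with `linout = TRUE` the output unit is linear, so the response can stay on its original scale and no division by 50 is needed. The seed, `decay`, and `maxit` values are illustrative guesses, and the fit quality will depend on them.

```r
library(mlbench)
library(nnet)

data(BostonHousing)

set.seed(1)  # arbitrary seed so the run is repeatable

# linout = TRUE switches the output unit from logistic to linear,
# so medv is modeled directly instead of medv/50.
fit_lin <- nnet(medv ~ ., data = BostonHousing, size = 2,
                linout = TRUE, decay = 0.1, maxit = 1000, trace = FALSE)

pred <- predict(fit_lin)               # already on the medv scale
print(mean((pred - BostonHousing$medv)^2))
```

The hidden units are still logistic, so scaling the predictors may still help the optimizer even though the response no longer needs it.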

Well, the training algorithm built into R is not very efficient. In this case you can add weight decay (setting the parameter ‘decay’ to a value greater than zero), and the algorithm is less likely to get stuck in local minima. However, the nnet algorithm seems quite naive, and I suppose there are other packages with more advanced methods.


What’s the point of testing on the training set? It’s clear from the image that the neural network overfits and will not generalize to unknown data. Try separating the training and testing sets and you’ll probably get less impressive results.
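A minimal sketch of such a holdout comparison, assuming a 70/30 random split and an arbitrary seed (neither comes from the article):

```r
library(mlbench)
library(nnet)

data(BostonHousing)

set.seed(42)                                   # arbitrary seed
n <- nrow(BostonHousing)
train_idx <- sample(n, size = round(0.7 * n))  # assumed 70/30 split
train_set <- BostonHousing[train_idx, ]
test_set  <- BostonHousing[-train_idx, ]

# Fit both models on the training rows only.
lm_fit <- lm(medv ~ ., data = train_set)
nn_fit <- nnet(medv / 50 ~ ., data = train_set, size = 2,
               maxit = 1000, trace = FALSE)

mse <- function(pred, actual) mean((pred - actual)^2)

# Evaluate on rows that neither model has seen.
holdout <- c(
  lm   = mse(predict(lm_fit, newdata = test_set), test_set$medv),
  nnet = mse(predict(nn_fit, newdata = test_set) * 50, test_set$medv)
)
print(holdout)
```

A single split gives a noisy estimate on 506 rows, which is one reason the article's caret run uses bootstrap resampling instead.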

There are several options to reduce overfitting. In general, reducing the number of units in the hidden layer or adding training examples can help, but that is not possible in this example. However, it is still possible to increase the decay value or remove some features. I would start by varying decay over something like 0, 0.0001, 0.001, 0.01, 0.1.

Well, try reducing the number of hidden layers and the overfitting issue will go away.

Hello,

I haven’t tried the nnet package yet; instead I wrote the Rcpp glue code between the Shark Machine Learning library and R to create a more flexible setup. According to Prof. Hinton (University of Toronto), there is a bag of tricks for training a well-designed network; but even putting structural changes aside, a possible approach to avoid overfitting is to monitor bias/variance and do early stopping during the training phase.

Training deep networks is an art; it is a good idea to start with a linear, then sigmoid, then linear setup, because during the training phase the backpropagated error values either explode or die off, crippling the network. Using a Hessian-free optimizer may help; I haven’t tried it yet, as a C++ implementation is needed to port J. Martens’s (University of Toronto) Hessian-free optimizer. The scaling mentioned above also helps the optimizer a lot.

In fall 2012 I took Geoffrey Hinton’s Neural Networks for Machine Learning on Coursera, and since then, I’ve wanted a way to train these modern kinds of neural networks in R to practice the art you are writing about. It’s remarkably hard, for example, to find implementations of dropout, whether in open source or commercial software: many seem about a decade behind the state of the art. In R, there is not even an implementation of neural networks with momentum, which I think has been around for two decades.

Did you try the Shark Machine Learning library? It has state-of-the-art features and a great software architecture. I was able to reproduce the results on randomly picked datasets from the UCI Machine Learning Repository.

Steven, I took a quick look at the Shark web page, and Shark seems to be missing dropout for neural networks. If I were going to try another neural network library, I would really want dropout, which Hinton claims makes a large difference in performance. So far I have only found one implementation of neural networks with dropout, which is for Matlab/Octave. At this time I am focusing my effort on gradient boosting as found in the GBM package, but eventually I want to return to neural networks.

I suppose you mean this article: Improving Neural Networks with Dropout by Nitish Srivastava? Of course it would be great to have that functionality out of the box, as well as James Martens and Ilya Sutskever’s Hessian-free optimizer. I plan to implement the latter and will look into the easiest way to remove nodes from an FFnet object during the training phase. So far void setStructure (std::size_t in, std::size_t out, IntMatrix const &cmat) seems to be the way to go, but I need to clarify this with the original developers.

I learned about dropout in Hinton’s class on Coursera, but I think this is the paper for it: “Improving neural networks by preventing co-adaptation of feature detectors” by Geoffrey E. Hinton, Nitish Srivastava, Alex Krizhevsky, Ilya Sutskever, Ruslan R. Salakhutdinov.

The implementation of Dropout in DeepLearnToolbox (for Matlab/Octave) looks very simple (less than 10 lines of code).

What’s the activation function used in a NN for regression?

In this example I used logistic, but logistic isn’t necessarily the best activation function for this or other cases.

Hi, I was wondering whether the weights of a neural network without hidden layers and with a logistic activation function are the same as the parameters of a logistic regression. Can we build a neural network without hidden layers with nnet? Thanx

zzztilt, yes, I think they are equivalent. No, the “nnet” package does not allow disabling the hidden layer (without using skip layer connections), but you may look at the other R neural network packages such as neuralnet and RSNNS.
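The skip-layer route mentioned above can be sketched as follows: with `size = 0` and `skip = TRUE`, nnet fits direct input-to-output connections only, and with `entropy = TRUE` it is fitted by maximum likelihood, which is the same model as logistic regression. The mtcars data, the standardized predictor, and the seed are assumptions made for illustration; the two sets of coefficients should be close but need not match to every digit, since the optimizers differ.

```r
library(nnet)

# Binary outcome from a built-in data set (mtcars is used only for
# illustration; it does not appear in the article).
d <- data.frame(vs    = mtcars$vs,
                mpg_z = as.numeric(scale(mtcars$mpg)))  # standardized input

# Reference model: ordinary logistic regression.
glm_fit <- glm(vs ~ mpg_z, data = d, family = binomial)

set.seed(1)  # arbitrary seed for the random initial weights
# size = 0 with skip = TRUE: no hidden units, only direct connections
# from the bias and the input to the logistic output unit.
nn_fit <- nnet(vs ~ mpg_z, data = d, size = 0, skip = TRUE,
               entropy = TRUE, maxit = 1000, trace = FALSE)

# Side-by-side intercept/bias and slope/weight.
print(rbind(glm = coef(glm_fit), nnet = coef(nn_fit)))
```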

Is it possible to train a NN with more than one outcome parameter at a time using the caret package?

Alvaro, if you are trying to do multinomial classification with caret, it looks like you can use the “multinom” method from the “nnet” package. (I never tried it, though.)

Interesting use of nnet for regression.

Please change the lines where you code the gender by numbers to what is shown below.

Otherwise, R would throw an error:

Error in `[<-.data.frame`(`*tmp*`, Sex == "M", 1, value = "1") : object 'Sex' not found

data[data$Sex == "M",1] <- "1"

data[data$Sex == "F",1] <- "2"

data[data$Sex == "I",1] <- "0"

Can the accuracy of nnet be increased by increasing the number of nodes, increasing the number of iterations, increasing the number of predictors by bringing in more variables, or doing all of these?

I have no clue about nnet theory, so my apologies if this is a very basic question.

In machine learning, there is a science to balancing the complexity of the model (e.g., number of predictors and nodes and layers in a neural network) with the amount of signal in the data. If the model is too complex, the model learns the noise and overfits the data. If the model is too simple, it underfits the data.

Sir, my question is: what is the purpose of linear regression in a neural network, and why do we use supervised training?

2) Kindly describe the steps of neural network training and how the network is trained (if possible, kindly explain with a flow diagram).

How do I install the library? Also, can anyone suggest a library for MATLAB?

Aniket, the nnet package already ships with R, so it does not need to be installed.

Hi,

Whenever I try to run the neural net and compute mean((nnet.predict - BostonHousing$medv)^2), I get 84.41956, and in the plot I see only a single fixed value that the model predicts.

How could I solve it?

Thanks